Experimental design refers to how participants are allocated to different groups in an experiment. Types of design include repeated measures, independent groups, and matched pairs designs.

Probably the most common way to design an experiment in psychology is to divide the participants into two groups, the experimental group and the control group, and then introduce a change to the experimental group, not the control group.

The researcher must decide how to allocate the sample to the different experimental groups. For example, if there are 10 participants, will all 10 take part in both conditions (as in repeated measures), or will the participants be split in half and take part in only one condition each (as in independent measures)?

Types

1. Independent Measures

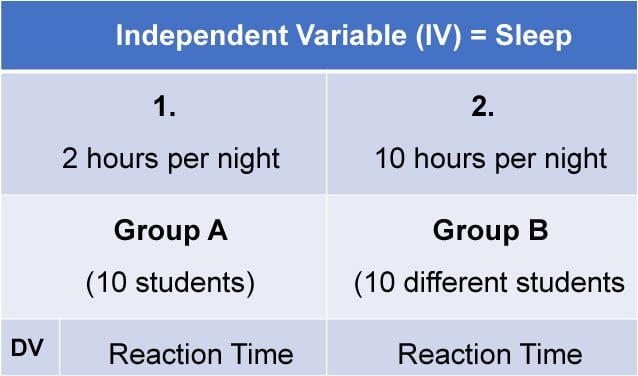

Independent measures design, also known as between-groups, is an experimental design where different participants are used in each condition of the independent variable. This means that each condition of the experiment includes a different group of participants.

Independent measures involve using two separate groups of participants, one in each condition.

2. Repeated Measures Design

Repeated Measures design is an experimental design where the same participants participate in each independent variable condition. This means that each experiment condition includes the same group of participants.

Repeated Measures design is also known as within-groups or within-subjects design.

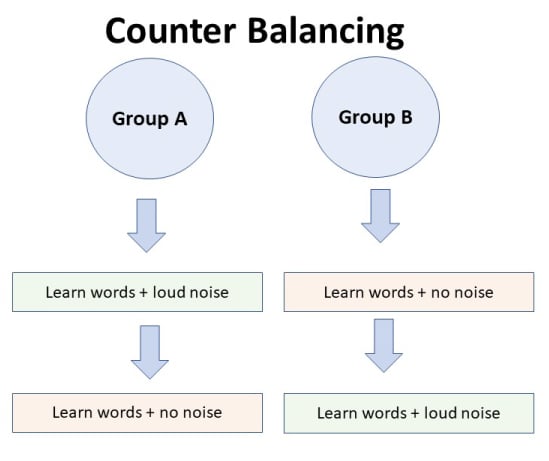

Counterbalancing

Suppose we used a repeated measures design in which all of the participants first learned words in “loud noise” and then learned them in “no noise.”

We expect the participants to learn better in “no noise” because of order effects, such as practice. However, a researcher can control for order effects using counterbalancing.

The sample would be split into two groups, and the two conditions (A and B, e.g., “loud noise” and “no noise”) would be completed in opposite orders. For example, group 1 does ‘A’ then ‘B,’ and group 2 does ‘B’ then ‘A.’ This is to control for order effects.

Although order effects occur for each participant, they balance each other out in the results because they occur equally in both groups.
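The counterbalancing procedure described above can be sketched in a few lines of Python. This is only an illustrative sketch: the function name `counterbalance`, the `seed` parameter, and the participant numbers are assumptions made for the example, not part of any standard procedure or library.

```python
import random


def counterbalance(participants, seed=None):
    """Assign each participant an order of conditions: A then B, or B then A.

    Hypothetical helper for illustration. Participants are shuffled first so
    that membership of each order group is random, then split in half.
    """
    rng = random.Random(seed)
    shuffled = list(participants)
    rng.shuffle(shuffled)

    half = len(shuffled) // 2
    orders = {}
    for person in shuffled[:half]:
        orders[person] = ("A", "B")  # group 1: condition A first
    for person in shuffled[half:]:
        orders[person] = ("B", "A")  # group 2: condition B first
    return orders


# Example with 10 participants numbered 1 to 10 (assumed sample size).
schedule = counterbalance(range(1, 11), seed=0)
for person in sorted(schedule):
    print(person, schedule[person])
```

With an even number of participants, each order is used equally often, so any practice or fatigue effect is spread evenly across conditions A and B rather than favouring one of them.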

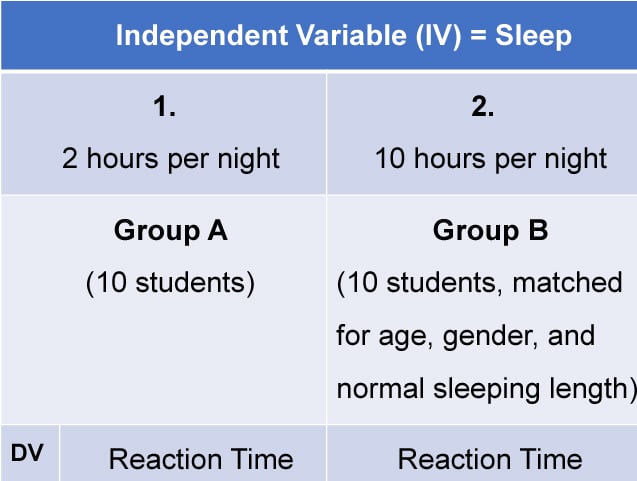

3. Matched Pairs Design

A matched pairs design is an experimental design where pairs of participants are matched in terms of key variables, such as age or socioeconomic status. One member of each pair is then placed into the experimental group and the other member into the control group.

One member of each matched pair must be randomly assigned to the experimental group and the other to the control group.
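One way to picture the matching and random assignment steps is the short Python sketch below. It assumes a single matching variable (age) and pairs participants who are adjacent after sorting; real studies may match on several variables at once. The function name `matched_pairs` and the sample data are hypothetical.

```python
import random


def matched_pairs(participants, key, seed=None):
    """Pair participants with similar values of `key`, then randomly assign
    one member of each pair to the experimental group and the other to the
    control group. With an odd sample, the last participant is left unpaired.
    """
    rng = random.Random(seed)
    ranked = sorted(participants, key=lambda p: p[key])

    experimental, control = [], []
    for i in range(0, len(ranked) - 1, 2):
        pair = [ranked[i], ranked[i + 1]]  # neighbours after sorting
        rng.shuffle(pair)                  # random assignment within the pair
        experimental.append(pair[0])
        control.append(pair[1])
    return experimental, control


# Hypothetical sample: six participants matched on age.
sample = [
    {"id": 1, "age": 34}, {"id": 2, "age": 19}, {"id": 3, "age": 21},
    {"id": 4, "age": 33}, {"id": 5, "age": 52}, {"id": 6, "age": 50},
]
experimental, control = matched_pairs(sample, key="age", seed=0)
for e, c in zip(experimental, control):
    print("experimental:", e, "| control:", c)
```

Because assignment within each pair is random, neither group systematically receives the older or younger member of a pair.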

Summary

Experimental design refers to how participants are allocated to an experiment’s different conditions (or IV levels). There are three types:

1. Independent measures / between-groups: Different participants are used in each condition of the independent variable.

2. Repeated measures / within-groups: The same participants take part in each condition of the independent variable.

3. Matched pairs: Each condition uses different participants, but they are matched in terms of important characteristics, e.g., gender, age, intelligence, etc.

Learning Check

Read about each of the experiments below. For each experiment, identify (1) which experimental design was used; and (2) why the researcher might have used that design.

To compare the effectiveness of two different types of therapy for depression, depressed patients were assigned to receive either cognitive therapy or behavior therapy for a 12-week period.

To assess the difference in reading comprehension between 7- and 9-year-olds, a researcher recruited each group from a local primary school. They were given the same passage of text to read and then asked a series of questions to assess their understanding.

To assess the effectiveness of two different ways of teaching reading, a group of 5-year-olds was recruited from a primary school. Their level of reading ability was assessed, and then they were taught using scheme one for 20 weeks.

At the end of this period, their reading was reassessed, and a reading improvement score was calculated. They were then taught using scheme two for a further 20 weeks, and another reading improvement score for this period was calculated. The reading improvement scores for each child were then compared.

To assess the effect of organization on recall, a researcher randomly assigned student volunteers to two conditions.

Participants in condition one attempted to recall a list of words that were organized into meaningful categories; participants in condition two attempted to recall the same words, randomly grouped on the page.

Experiment Terminology

Ecological validity

The degree to which an investigation represents real-life experiences.

Experimenter effects

These are the ways that the experimenter can accidentally influence the participant through their appearance or behavior.

Demand characteristics

Clues in an experiment that lead the participants to think they know what the researcher is looking for (e.g., the experimenter’s body language).

Independent variable (IV)

The variable the experimenter manipulates (i.e., changes), which is assumed to have a direct effect on the dependent variable.

Dependent variable (DV)

The variable the experimenter measures. This is the outcome (i.e., the result) of a study.

Extraneous variables (EV)

All variables which are not independent variables but could affect the results (DV) of the experiment. Extraneous variables should be controlled where possible.

Confounding variables

Variable(s) that have affected the results (DV), apart from the IV. A confounding variable could be an extraneous variable that has not been controlled.

Random Allocation

Randomly allocating participants to independent variable conditions means that all participants should have an equal chance of taking part in each condition.

The principle of random allocation is to avoid bias in how the experiment is carried out and limit the effects of participant variables.
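As a rough illustration of random allocation, the Python sketch below shuffles the sample and then deals participants out to the conditions in turn, so that each participant has an equal chance of ending up in each condition and group sizes stay as even as possible. The function name `randomly_allocate`, the condition labels, and the sample size are assumptions chosen for the example.

```python
import random


def randomly_allocate(participants, conditions, seed=None):
    """Randomly allocate participants to the given conditions.

    Hypothetical helper: shuffle the sample, then assign participants to
    conditions in rotation so that group sizes differ by at most one.
    """
    rng = random.Random(seed)
    shuffled = list(participants)
    rng.shuffle(shuffled)

    groups = {condition: [] for condition in conditions}
    for index, person in enumerate(shuffled):
        condition = conditions[index % len(conditions)]
        groups[condition].append(person)
    return groups


# Example: 10 participants split between two conditions.
groups = randomly_allocate(range(1, 11), ["experimental", "control"], seed=0)
for condition, members in groups.items():
    print(condition, sorted(members))
```

Because the researcher does not choose who goes where, differences between participants (participant variables) should be spread roughly evenly across the conditions.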

Order effects

Changes in participants’ performance due to their repeating the same or similar test more than once. Examples of order effects include:

(i) practice effect: an improvement in performance on a task due to repetition, for example, because of familiarity with the task;

(ii) fatigue effect: a decrease in performance of a task due to repetition, for example, because of boredom or tiredness.

Olivia Guy-Evans, MSc

BSc (Hons) Psychology, MSc Psychology of Education

Olivia Guy-Evans is a writer and associate editor for Simply Psychology. She has previously worked in healthcare and educational sectors.

Saul McLeod, PhD

BSc (Hons) Psychology, MRes, PhD, University of Manchester

Saul McLeod, PhD., is a qualified psychology teacher with over 18 years of experience in further and higher education. He has been published in peer-reviewed journals, including the Journal of Clinical Psychology.